XKCD Finder

By Luke Miller

- 10 minute read - 1933 wordsXKCD comics have become a cornerstone of internet culture, particularly in technical circles, with their witty takes on science, programming, and mathematics. However, finding the perfect XKCD for a particular topic or reference can be challenging - there are now over 3,000 comics in the archive, and traditional search methods rely heavily on exact keyword matches or remembering specific comic numbers.

This project explores how modern Natural Language Processing (NLP) techniques can be used to search XKCD comics semantically, understanding the underlying meaning rather than just matching keywords. By applying vector embeddings and Retrieval Augmented Generation (RAG) to comic descriptions, we can now perform a search based on concepts, themes, and abstract ideas.

In this article, I’ll walk you through the technical journey of building this system, from collecting data to visualizing results, while highlighting the key innovations and methodologies that make it all possible. Whether you’re a seasoned Machine Learning enthusiast or just a fan of XKCD, there’s something here for everyone to enjoy. So, let’s dive into the world where humour meets technology, and see how RAG can enhance our comic strip adventures!

Do drop a star (⭐) on the GitHub project page if you enjoy reading this article.

(18th July 2025) EDIT: A fun demo of this is now available at xkcd-finder.com. Enjoy!

XKCD Dataset

XKCD comics, created by Randall Munroe, are more than just simple stick figures - they often contain complex ideas and multi-layered jokes that require significant context to fully appreciate. However, navigating through this extensive archive can be daunting, as they stand at more than 3,000 in number.

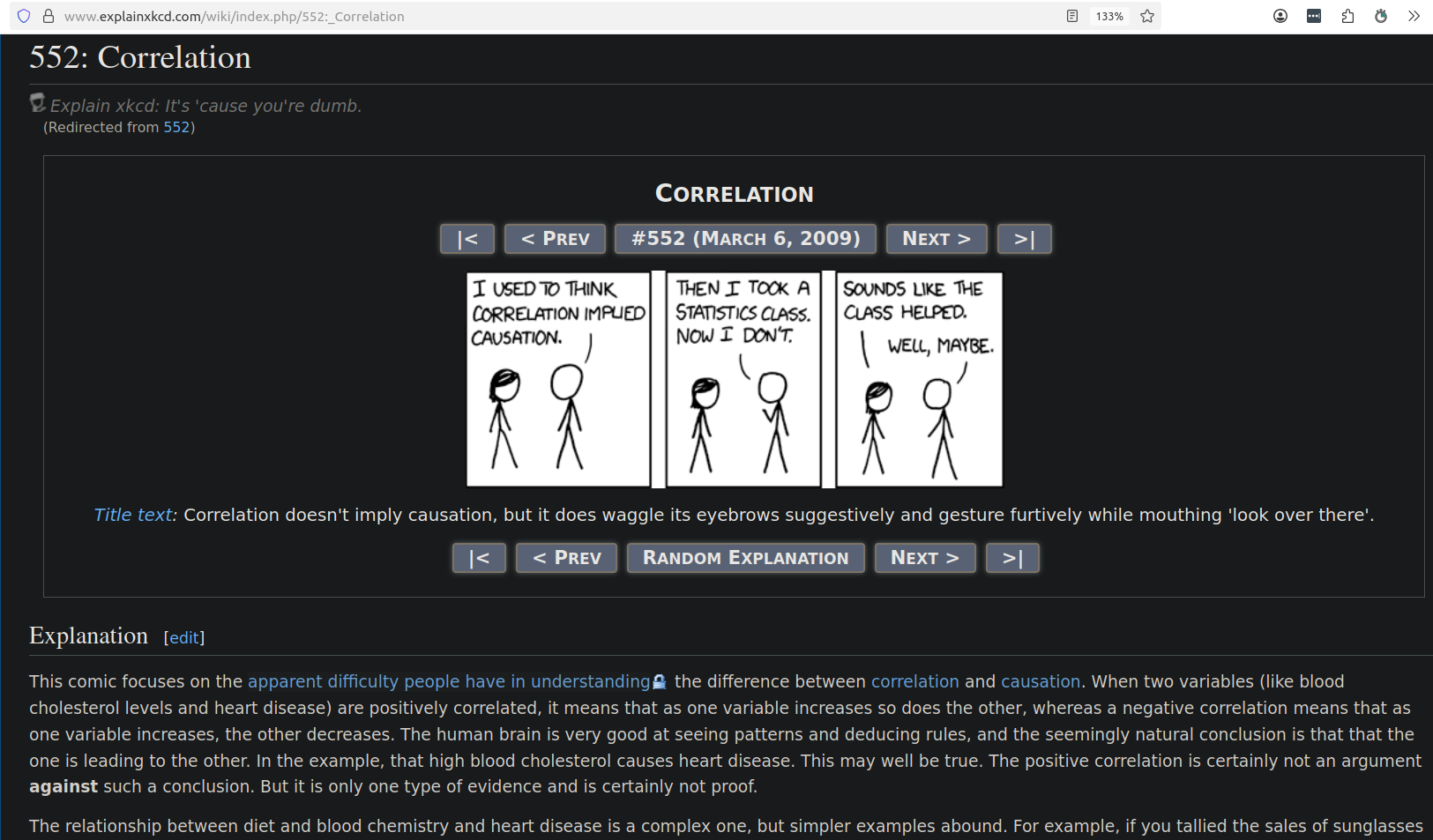

Fortunately, the community at Explain XKCD has created detailed explanations for almost every comic, breaking down the references, scientific concepts, and cultural context that make each one meaningful.

As a part of this project, I built a data harvester to collect these explanations, which serve as rich textual representations of the comics themselves. The explanations typically include:

- a basic description of what’s happening in the comic,

- technical or cultural references being made,

- mathematical or scientific concepts being explored,

- hidden jokes or ‘alt-text’ explanations,

- related comics and themes,

- the comic’s full transcript.

Here’s an example:

The main observation is that the information in the explanation is much richer than a plain description of the image content itself, as it contains analysis of what happens throughout the comic. This will ultimately allow us to more effectively navigate the space of XKCD comics.

The data are stored as simple JSON blobs such as in this example:

{

"comic_id": 42,

"title": "Geico",

"image_url": "https://imgs.xkcd.com/comics/geico.jpg",

"explanation": "This comic references a long-running ad campaign for Geico insurance in which a character (different in each commercial) lists a series ...",

"transcript": "[Cueball holding a golf club.]\nCueball: I just saved a bunch of money on my car insurance by threatening my agent with a golf club."

}

Note: Data harvesting originally involved data scraping, but for anyone who wants to run the code in this project themselves,

the functions now point to a mirror in my open S3 bucket - s3://lukerm-ds-open/xkcd. Please configure your credentials

first before attempting to download the data. This is out of politeness to the Explain XKCD website.

Populating the Vector Database

The heart of semantic search is the vector database, where we store the embedded representations of each comic’s explanation and transcript (separately). I chose Weaviate as the vector database for this project due to its robust Approximate Nearest Neighbour (ANN) search capabilities and straightforward Python client.

Getting started was as simple as spinning up a Docker container from the weaviate

registry. This enables to start interacting with database on port 8080.

When populating the database, I took advantage of OpenAI’s text-embedding-3-small model to transform the textual

descriptions into vector representations of dimension 1,536. This embedding process occurs “on the way in,” meaning that

as we pass in the data, it is embedded automatically by Weaviate’s integration with OpenAI’s model APIs, via the

text2vec-openai module. Additionally, we store comic metadata, such its ID and title.

This project’s population script enables both bulk insertion (the entire corpus), or a specific set of comic IDs for testing. Note: my method is duplicate safe since it will upsert by default, with an identifier derived from the comic ID which is unique to it.

Overall, assuming the data were available locally, it took just a couple of minutes to populate the embeddings into their dedication collection. The main bottleneck was retrieving the vectors from the API - this could be improved with asynchronous processing, but it did not feel worth it for this scale (plus the background risk of running into request limits would inevitably cause more complexity!).

With the database populated and ready-to-go, it’s time to start searching through the comics semantically - which we’ll explore in the next section on Retrieval.

Retrieval

Once the comics are embedded in the vector database, we can perform semantic searches using a query-by-text approach. The process is straightforward: at query time, the search program

- embeds the user’s search phrase using the same

text-embedding-3-smallmodel by OpenAI, - searches the vector index via Hierarchical Navigable Small World1 (HNSW), an efficient graph-based ANN algorithm,

- and simultaneously performs a sparse keyword search, combining the two in what is known as “hybrid search”

- returns the

Nnearest comics ranked by cosine similarity (three by default).

The power of this approach becomes apparent when searching for abstract concepts. For example, searching for “superintelligence” returns comics about possible future events relating to AI, even if the phrase never appears in the original texts - in this example, Robot Future and Judgment Day are returned as hits 3 and 4, despite not containing the keyword.

Currently, what I have described so far is known as Information Retrieval. To enhance this further, the next step is to enable Retrieval Augmented Generation (RAG) which endows the large language model’s context with the information found in the search, allowing it to respond naturally to a request, grounded with this extra knowledge that it might not have had otherwise.

In this setup, the RAG engine will provide shortened, natural explanations of the comic itself and how each comic relates the query. This helps users not only find relevant comics, but also to understand the semantic connection to their search term.

Note: generation is possible by enabling the generative-openai module in Weaviate on boot-up.

Let’s see this powerful tool in action. First, check the database is available:

$ cd ~/xkcd-comic-finder/ && export PYTHONPATH=.

$ python -m src.database.weaviate_client --test-connection

2025-06-24 17:54:18,827 - INFO - Connecting to Weaviate at http://localhost:8080

2025-06-24 17:54:18,836 - INFO - Connected to Weaviate successfully

2025-06-24 17:54:18,836 - INFO - Testing connection to Weaviate at http://localhost:8080...

2025-06-24 17:54:18,837 - INFO - ✅ Connection test successful!

2025-06-24 17:54:18,837 - INFO - Getting database information from http://localhost:8080...

2025-06-24 17:54:18,843 - INFO - Version: 1.28.4

2025-06-24 17:54:18,843 - INFO - Schema classes: XKCDComic

2025-06-24 17:54:18,843 - INFO - XKCDComic count: 3090

Next, we’ll carry out the RAG with “centripetal” as the search term:

$ python -m src.search.query --query "centripetal" --do-rag

2025-06-24 17:56:35,357 - INFO - Connecting to Weaviate at http://localhost:8080

2025-06-24 17:56:35,364 - INFO - Connected to Weaviate successfully

Searching for comics with query: 'centripetal'

2025-06-24 17:56:35,364 - INFO - Searching for comics with query: 'centripetal'

2025-06-24 17:56:55,209 - INFO - Found 3 comics matching query

Found 3 results:

1. Comic #123: Centrifugal Force

Explanation: In the XKCD comic, Black Hat claims that centrifugal force will kill James Bond on a centrifuge,

but Bond corrects him by saying it's actually centripetal force. The comic humorously plays on the

common misconception of centrifugal force and the technicalities of Newtonian mechanics.

This comic relates to centripetal force by highlighting the confusion between the two forces and how they are often

misunderstood or misrepresented in popular culture.

2. Comic #2973: Ferris Wheels

Explanation: In the XKCD comic, three Ferris wheels are connected using a belt drive system, causing the wheels

to rotate at different speeds, with the third wheel spinning dangerously fast. The comic humorously

explores the consequences of trying to link up Ferris wheels in this way, highlighting the absurdity

of the situation and the potential dangers of such a setup.

This comic relates to centripetal force by showing how the rotational motion of the Ferris wheels can be altered and

amplified through mechanical connections, resulting in extreme speeds and forces that would not be safe for passengers.

3. Comic #226: Swingset

Explanation: In the XKCD comic, Cueball imagines becoming weightless at the peak of his swing, leading to a funny

scenario where he tries to fly using a pocket fan as a propeller. This relates to centripetal force

because at the apex of the swing, there is a moment of weightlessness due to the absence of centripetal

force, but in reality, Cueball would come crashing down if he attempted to fly with a pocket fan.

You can see that the search + generation takes approximately 20 seconds, mainly slowed by the generation part - without this, it returns in about 1 second.

Visualization

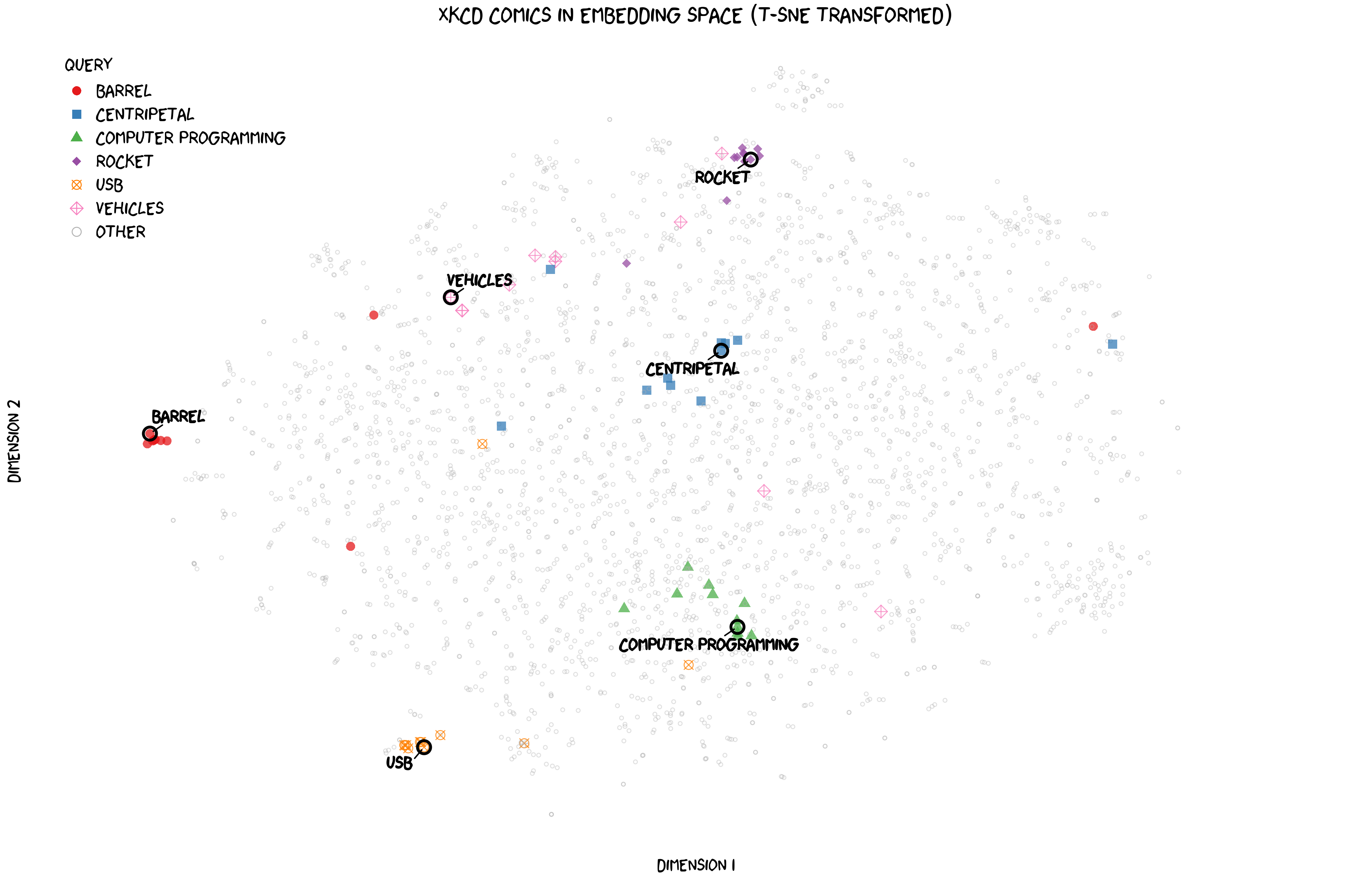

To better understand how the semantic search operates in practice, I created a visualization of the entire XKCD comic space, reduced from the original 1,536 dimensions to just two using t-SNE2. Generally, those points closer to nearer each other in the high-dimensional space will remain so in this low-dimensional projection, though the mapping is never perfect.

In the visualization above, each point represents one of the first 3,000 XKCD comics in the archive. I’ve embedded and highlighted six different search terms, along with the top 10 retrieved comics. Each query group is shown in a distinct colour and shape, except those not relating to any query (the majority, in fact), which are in grey. This gives us some insight into how the embedding model groups similar concepts together in vector space, albeit it through this low-dimensional lense.

The clustering reveals some interesting patterns. Comics about similar topics usually group together along with the embedded query. For instance, most of the comics relating to “rocket” are near to that (embedded) single-word query term. Most groups have this phenomenon - which you would expect to see if everything’s working correctly - but there is some variation in how close they cluster around their query - comics relating to “computer programming” are somewhat spread out, whereas the neighbours of “barrel” are very tightly huddled (but for a few outliers).

The exceptional group is “vehicles” whose points are spread quite far from the query term, perhaps suggesting that it’s quite a diverse group, referencing everything from cars to skateboards to houseboats! Interestingly, the “vehicles” point very close to the “rocket” group is indeed spacecraft-related: it’s called “Payloads” (#1461).

Conclusion

In this project, I’ve harnessed the power of Retrieval Augmented Generation to transform how we explore the vast and brilliant XKCD archive. By employing semantic search, through vector embeddings and powerful algorithms (like HNSW), I built a comic-lookup system that not only efficiently retrieves relevant comics, but can also compliment this search with context-aware generated descriptions.

This project underscores the potential of semantic search in bridging technology with beloved content, which allows us to explore the profound connections within XKCD’s universe. Watch this space for the online tool …

Please don’t forget to hit the ⭐ button on GitHub if you’ve enjoyed reading about this project!

References

-

Malkov, Y., & Yashunin, D. (2020). Efficient and robust approximate nearest neighbour search using Hierarchical Navigable Small World graphs. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42(4), 824-836. ↩︎

-

Van der Maaten, L., & Hinton, G. (2008). Visualizing Data using t-SNE. Journal of Machine Learning Research, 9(11). ↩︎